tagtog is a multi-user tool. Collaborate with other users to annotate faster and improve the quality of your annotations.

tagtog supports different roles for annotators. Each user can annotate their own copy of the text, which facilitates the review process and the measurement of inter-annotator agreement (IAA).

Roles

tagtog comes with a set of predefined user roles. Below is a summary of each role; the Permissions section further down lists the detailed permissions for these roles.

| Role | Description |
|---|---|
| admin | Usually they set up the project and track it. They can read and edit all users' annotations, as well as master's and their own annotations. Moreover, they can edit all the project's settings. All permissions are active for this role. By default, the user who creates a project becomes its admin. More details. |
| reviewer | They review and approve the annotators' annotations. They can read and edit all users' annotations, as well as master's and their own annotations. Moreover, they can edit some settings, see the project metrics, and use the API. More details. |
| supercurator | In addition to the regular annotator routine, they can perform some privileged tasks. They can edit master's and their own annotations. They can read the project settings, see the project metrics, and use the API. More details. |
| curator | Regular annotators. They can edit their own annotations. They cannot edit master's annotations, but they can export master into their own annotations. They can neither see the project metrics nor use the API. More details. |
| reader | Perfect for users who only want to consume the production annotations and check the progress. They can only read master's annotations. They can see the project metrics. More details. |

Create custom roles

Depending on your plan, you can create custom roles and define their permissions. Read how to manage and create custom roles.

Permissions

tagtog's role-based access control helps you manage what users can do in a project and which areas they can access. Below are the permissions available in tagtog. Each role has an associated set of permissions.

| Realm | Component | Permission | Description | Reader | Curator | Supercurator | Reviewer | Admin |
|---|---|---|---|---|---|---|---|---|
| settings | Guidelines | canReadGuidelinesConf | Read access for Settings - Guidelines | ✅ | ✅ | ✅ | ✅ | ✅ |
| | | canEditGuidelinesConf | Write access for Settings - Guidelines | ❌ | ❌ | ❌ | ✅ | ✅ |
| | Annotation Tasks | canReadAnnTasksConf | Read access for all annotation tasks, namely: Document Labels, Entities, Dictionaries, Entity Labels, and Relations | ✅ | ✅ | ✅ | ✅ | ✅ |
| | | canEditAnnTasksConf | Write access for all annotation tasks, namely: Document Labels, Entities, Dictionaries, Entity Labels, and Relations | ❌ | ❌ | ❌ | ✅ | ✅ |
| | Requirements | canReadRequirementsConf | Read access for Settings - Requirements | ✅ | ✅ | ✅ | ✅ | ✅ |
| | | canEditRequirementsConf | Write access for Settings - Requirements | ❌ | ❌ | ❌ | ✅ | ✅ |
| | Annotatables | canReadAnnotatablesConf | Read access for Settings - Annotatables | ✅ | ✅ | ✅ | ✅ | ✅ |
| | | canEditAnnotatablesConf | Write access for Settings - Annotatables | ❌ | ❌ | ❌ | ✅ | ✅ |
| | Annotation | canReadAnnotationsConf | Read access for Settings - Annotations | ✅ | ✅ | ✅ | ✅ | ✅ |
| | | canEditAnnotationsConf | Write access for Settings - Annotations | ❌ | ❌ | ❌ | ✅ | ✅ |
| | Webhooks | canReadWebhooksConf | Read access for Settings - Webhooks | ❌ | ❌ | ❌ | ✅ | ✅ |
| | | canEditWebhooksConf | Write access for Settings - Webhooks | ❌ | ❌ | ❌ | ✅ | ✅ |
| | Members | canReadMembersConf | Read access for Settings - Members | ❌ | ❌ | ❌ | ✅ | ✅ |
| | | canEditMembersConf | Write access for Settings - Members | ❌ | ❌ | ❌ | ❌ | ✅ |
| | Admin | canReadAdminConf | Read access for Settings - Admin | ❌ | ❌ | ❌ | ✅ | ✅ |
| | | canEditAdminConf | Write access for Settings - Admin | ❌ | ❌ | ❌ | ❌ | ✅ |
| documents | Content | canCreate | Rights to import documents to the project | ❌ | ❌ | ✅ | ✅ | ✅ |
| | | canDelete | Rights to remove documents from the project | ❌ | ❌ | ✅ | ✅ | ✅ |
| | Own version | canEditSelf | Write access to the user's own version of the annotations | ❌ | ✅ | ✅ | ✅ | ✅ |
| | Master version | canReadMaster | Read access to the master version of the annotations | ✅ | ✅ | ✅ | ✅ | ✅ |
| | | canEditMaster | Write access to the master version of the annotations (ground truth) | ❌ | ❌ | ✅ | ✅ | ✅ |
| | Others' versions | canReadOthers | Read access to every project member's versions of the annotations | ❌ | ❌ | ❌ | ✅ | ✅ |
| | | canEditOthers | Write access to every project member's versions of the annotations | ❌ | ❌ | ❌ | ✅ | ✅ |
| folders | | canCreate | Rights to create folders | ❌ | ❌ | ✅ | ✅ | ✅ |
| | | canUpdate | Rights to rename existing folders | ❌ | ❌ | ✅ | ✅ | ✅ |
| | | canDelete | Rights to delete existing folders | ❌ | ❌ | ✅ | ✅ | ✅ |
| dictionaries | | canCreateItems | Rights to add items to the dictionaries using the editor | ❌ | ❌ | ✅ | ✅ | ✅ |
| metrics | | canRead | Read access to the project metrics (Metrics tab) or to the metrics for annotation tasks in a document (e.g., IAA) | ✅ | ❌ | ✅ | ✅ | ✅ |
| API | | canUse | Use the API. Users with this permission can also see the output formats in the UI | ❌ | ❌ | ✅ | ✅ | ✅ |
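If you script checks against your project's roles, it can help to treat the table above as a lookup matrix. The Python sketch below copies a subset of its rows into a dictionary, one flag per default role. The `realm.permission` key format and the helper names are hypothetical and not part of the tagtog API.

```python
from typing import Dict, Set, Tuple

# Column order of the permission table above.
ROLES: Tuple[str, ...] = ("reader", "curator", "supercurator", "reviewer", "admin")

# A subset of the permission table: "realm.permission" -> one flag per role (ROLES order).
# The key format is an illustrative assumption, not a tagtog identifier.
TABLE: Dict[str, Tuple[int, ...]] = {
    "settings.canReadGuidelinesConf": (1, 1, 1, 1, 1),
    "settings.canEditGuidelinesConf": (0, 0, 0, 1, 1),
    "settings.canEditMembersConf":    (0, 0, 0, 0, 1),
    "documents.canCreate":            (0, 0, 1, 1, 1),
    "documents.canEditSelf":          (0, 1, 1, 1, 1),
    "documents.canReadMaster":        (1, 1, 1, 1, 1),
    "documents.canEditMaster":        (0, 0, 1, 1, 1),
    "documents.canReadOthers":        (0, 0, 0, 1, 1),
    "metrics.canRead":                (1, 0, 1, 1, 1),
    "API.canUse":                     (0, 0, 1, 1, 1),
}


def permissions_of(role: str) -> Set[str]:
    """Return every permission in TABLE that is granted to `role`."""
    column = ROLES.index(role)  # raises ValueError for an unknown role
    return {perm for perm, flags in TABLE.items() if flags[column]}


def has_permission(role: str, permission: str) -> bool:
    return permission in permissions_of(role)


print(has_permission("curator", "metrics.canRead"))               # False
print(has_permission("supercurator", "documents.canEditMaster"))  # True
```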

Teams

tagtog lets you define teams of users to simplify and speed up your collaboration. Teams group users so you don't have to add each user to a project separately, which is especially handy when you have many users in the system. You can then add whole teams to projects, remove them from projects, define permissions for all team members, and so on. Currently, teams are only available for OnPremises. For more details, please go here.

Annotation versions

Each user whose role has the canEditSelf permission has an independent version of the annotations for every document. For instance, UserA could have 20 entities while UserB has 5 different entities on the exact same document. In addition, each document has a master version, which is usually treated as the final/official version (ground truth).

All of these versions are independent and can be confirmed separately.
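To make the idea of independent versions concrete, here is a hypothetical in-memory model in Python. The class and field names are illustrative assumptions, not tagtog's data format: each document holds one version per member plus `master`, and confirming one version leaves the others untouched.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

# Hypothetical simplification: an entity is (start offset, end offset, entity type).
Entity = Tuple[int, int, str]


@dataclass
class AnnotationVersion:
    entities: Set[Entity] = field(default_factory=set)
    confirmed: bool = False  # each version is confirmed independently


@dataclass
class Document:
    doc_id: str
    # One version per member, plus the special "master" version (ground truth).
    versions: Dict[str, AnnotationVersion] = field(default_factory=dict)

    def version(self, name: str) -> AnnotationVersion:
        """Get a member's (or master's) version, creating an empty one if needed."""
        if name not in self.versions:
            self.versions[name] = AnnotationVersion()
        return self.versions[name]


doc = Document("doc-1")
doc.version("UserA").entities.update((i * 10, i * 10 + 4, "e_1") for i in range(20))
doc.version("UserB").entities.update((i * 7, i * 7 + 3, "e_2") for i in range(5))
doc.version("UserA").confirmed = True  # does not affect UserB or master
```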

Annotation flows & Task Distribution

There are different ways you can organize your annotation tasks. These are the most common:

Annotators annotate directly on the master version (ground truth). No review.

This is the simplest flow, and there is no review step. Choose it if you are working alone, if you trust your annotators' annotations, or if time is a constraint. This is the project's default. For simplicity, we explain the flow using the default roles.

1. Add users to your project. As the project's admin, go to Settings → Members to add members to your project.

2. Create clear guidelines. The admin or reviewer writes what is to be annotated and which types of annotations to use. Clear and complete guidelines are key to aligning all project members.

3. Import text. The default roles admin, supercurator, and reviewer can import the documents to be annotated by the group. Any project member can see these documents.

4. Distribute documents among annotators. Either let users annotate documents that are not yet confirmed or, for example, manually assign document IDs to each user.

5. The group starts annotating. Each user annotates only the master version of the assigned documents. Once a document is annotated, the user marks the annotations as completed by clicking the Confirm button. The admin or reviewer can track the progress in the project metrics.

Documents are automatically distributed; one annotator per document

Choose this flow if the annotation task is simple or if time is a constraint. Because each document is assigned to only one annotator, the quality of the annotations depends on that annotator.

1. Add users to your project. As the project's admin, go to Settings → Members to add members to your project.

2. Create clear guidelines. The admin or reviewer writes what is to be annotated and which types of annotations to use. Clear and complete guidelines are key to aligning all project members.

3. Distribute documents among annotators. The project admin goes to Settings → Members, enables task distribution, selects who to distribute documents to, and selects 1 annotator per document.

4. Import text. The default roles admin, supercurator, and reviewer can import the documents to be annotated by the group. Any project member can see these documents. In addition, each annotator sees a TODO list with the documents assigned to them that they have not confirmed yet.

5. The group starts annotating. Users annotate their own version of the annotations for the assigned documents. Once done, users mark their version as completed by clicking the Confirm button.

6. Review. The reviewer (or admin) checks which documents are ready for review (in the Document list of the GUI, in the Metrics panel > documents, or with a search query). The reviewer copies the user's annotations to the master version (ground truth), reviews them, and makes the required changes. Reviewers then click the Confirm button in the master version to indicate that the review is complete and the document is ready for production.

Documents are automatically distributed; multiple annotators per document

This flow is ideal for projects that require high-quality annotations and involve complex annotation tasks (specific skills required, divergent interpretations, etc.).

1. Add users to your project. As the project's admin, go to Settings → Members to add members to your project.

2. Create clear guidelines. The admin or reviewer writes what is to be annotated and which types of annotations to use. Clear and complete guidelines are key to aligning all project members.

3. Distribute documents among annotators. The project admin goes to Settings → Members, enables task distribution, selects who to distribute documents to, and selects 2 or more annotators per document.

4. Import text. The default roles admin, supercurator, and reviewer can import the documents to be annotated by the group. Any project member can see these documents. In addition, each annotator sees a TODO list with the documents assigned to them that they have not confirmed yet.

5. The group starts annotating. Users annotate their own version of the annotations for the assigned documents. Once done, users mark their version as completed by clicking the Confirm button.

6. Adjudication. Reviewers (or admins) check which documents are ready for review (in the Document list of the GUI, in the Metrics panel > documents, or with a search query). For each document, reviewers merge the users' annotations into the master version (ground truth) using automatic adjudication. Reviewers then review the merged annotations and click the Confirm button in the master version to indicate that the review is complete.
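As a rough illustration of what task distribution does in the flows above, the following Python sketch assigns each document to a fixed number of distinct annotators in round-robin order and derives each annotator's TODO list. tagtog's actual distribution algorithm is not described here, so the round-robin strategy and all names are assumptions.

```python
from itertools import cycle
from typing import Dict, List, Set, Tuple


def distribute(doc_ids: List[str], annotators: List[str], per_doc: int) -> Dict[str, List[str]]:
    """Assign each document to `per_doc` distinct annotators, round-robin."""
    if not 1 <= per_doc <= len(annotators):
        raise ValueError("per_doc must be between 1 and the number of annotators")
    pool = cycle(annotators)
    # Consecutive picks from the cycle are distinct as long as per_doc <= len(annotators).
    return {doc_id: [next(pool) for _ in range(per_doc)] for doc_id in doc_ids}


def todo_list(assignment: Dict[str, List[str]], annotator: str,
              confirmed: Set[Tuple[str, str]]) -> List[str]:
    """Documents assigned to `annotator` that they have not confirmed yet."""
    return [doc_id for doc_id, users in assignment.items()
            if annotator in users and (doc_id, annotator) not in confirmed]


assignment = distribute(["d1", "d2", "d3"], ["ana", "ben", "cho"], per_doc=2)
# {'d1': ['ana', 'ben'], 'd2': ['cho', 'ana'], 'd3': ['ben', 'cho']}
print(todo_list(assignment, "ana", confirmed={("d1", "ana")}))  # ['d2']
```

With `per_doc=1`, the same function models the one-annotator-per-document flow.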

Quality Management

Here you will learn how to track the quality of your project in real time.

IAA (Inter-Annotator Agreement)

The Inter-Annotator Agreement (IAA) measures the degree of consensus among your annotators, which makes it a gauge of the quality of your annotation project. If all your annotators make the same annotations independently, your guidelines are likely clear and your annotations are most likely correct. The higher the IAA, the higher the quality.

In tagtog, each annotator can annotate the same piece of text separately. The percentage agreement is measured as soon as two different confirmed (✅) annotation versions exist for the same document, i.e., when at least one member's annotations and the master annotations are confirmed, or when two or more members' annotations are confirmed. tagtog calculates these scores automatically for you (IAA calculation methods). You can add members to your project at Settings > Members.
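Both conditions above boil down to "at least two confirmed versions, with master counting as one". A minimal Python sketch of that check, using a hypothetical `{version name: confirmed}` mapping:

```python
from itertools import combinations
from typing import Dict, List, Tuple


def comparable_pairs(confirmed: Dict[str, bool]) -> List[Tuple[str, str]]:
    """Pairs of confirmed versions ("master" or member names) that IAA can compare."""
    names = sorted(name for name, is_confirmed in confirmed.items() if is_confirmed)
    return list(combinations(names, 2))


def iaa_available(confirmed: Dict[str, bool]) -> bool:
    # At least two confirmed versions: master + one member, or two or more members.
    return len(comparable_pairs(confirmed)) > 0


print(iaa_available({"master": True, "alice": True}))  # True
print(iaa_available({"alice": True, "bob": False}))    # False
```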

If your project has Automatic Task Distribution activated, tagtog distributes the same documents to a set of annotators so that the IAA can be calculated right away. Even if you set the task distribution to one annotator per document (each document is assigned to a single annotator), tagtog automatically tries to allocate 5% of your documents to two annotators to calculate the IAA. If you prefer to control this process manually, the only requirement is that more than one annotator annotates the same documents. Using task distribution, you can manually assign a document to a specific set of annotators. More information.
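The following Python sketch shows one way such a 5% overlap could work when each document starts with a single annotator: sample about 5% of the documents (rounded up) and add a second, different annotator to each. How tagtog actually picks these documents is not specified in this section, so random sampling and the function name are assumptions.

```python
import random
from typing import Dict, List, Optional


def add_overlap(assignment: Dict[str, str], annotators: List[str],
                percent: int = 5, seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Give about `percent`% of single-annotator documents a second, different annotator."""
    rng = random.Random(seed)
    docs = sorted(assignment)
    k = (len(docs) * percent + 99) // 100  # percent of the documents, rounded up
    overlap = set(rng.sample(docs, k))
    result = {}
    for doc_id in docs:
        first = assignment[doc_id]
        users = [first]
        others = [a for a in annotators if a != first]
        if doc_id in overlap and others:
            users.append(rng.choice(others))  # second annotator enables IAA on this doc
        result[doc_id] = users
    return result


annotators = ["ana", "ben", "cho"]
single = {f"doc{i:03d}": annotators[i % 3] for i in range(100)}
result = add_overlap(single, annotators, seed=0)
print(sum(len(users) == 2 for users in result.values()))  # 5
```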

IAA at the project level

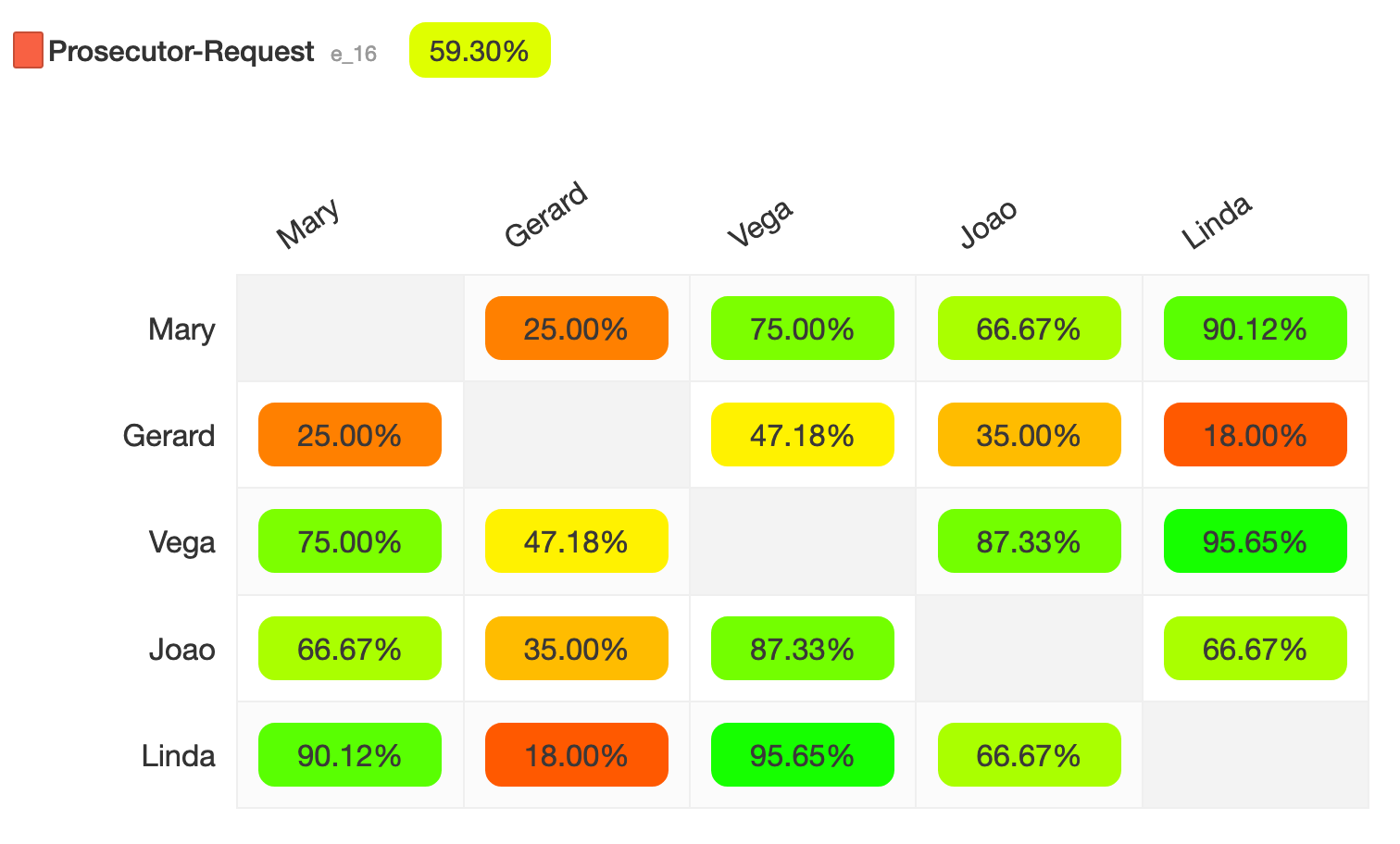

To view the IAA results, open your project and click the Metrics section. Results are split by annotation type (entity types, entity labels, document labels, normalizations, and relations). Each annotation type is divided into annotation tasks (e.g., Entity types: Entity type 1, Entity type 2; Document labels: document label 1, document label 2, etc.). For each annotation task, scores are displayed as a matrix. Each cell represents the agreement between a pair of annotators, with 100% being the maximum level of agreement and 0% the minimum.

The agreement percentage next to the title of each annotation task represents the average agreement for that annotation task.

Inter-annotator agreement matrix. It contains the scores between pairs of users. For example, Vega and Joao agree in 87% of the cases, and Vega and Gerard in 47%. This visualization provides an overview of the agreement among annotators and helps you find weak spots. In this example, Gerard is not aligned with the rest of the annotators (25%, 47%, 35%, 18%). Some training might be required to align him with the guidelines and the rest of the team. At the top left, you find the annotation task name, its ID, and the average agreement (59.30%).
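To illustrate how a matrix like this and its average can be derived, the Python sketch below scores every pair of annotators for one annotation task and averages the pairs. It uses exact-match F1 as a stand-in metric; tagtog's own IAA calculation methods may compute the scores differently, and the annotator data is made up.

```python
from itertools import combinations
from typing import Dict, Hashable, Set, Tuple


def pair_f1(a: Set[Hashable], b: Set[Hashable]) -> float:
    """Exact-match F1 between two annotation sets (symmetric in a and b)."""
    if not a and not b:
        return 1.0  # two empty versions trivially agree
    return 2 * len(a & b) / (len(a) + len(b))


def agreement_matrix(versions: Dict[str, Set[Hashable]]) -> Tuple[Dict[Tuple[str, str], float], float]:
    """Pairwise scores for one annotation task plus their average."""
    scores = {(u, v): pair_f1(versions[u], versions[v])
              for u, v in combinations(sorted(versions), 2)}
    average = sum(scores.values()) / len(scores) if scores else 0.0
    return scores, average


scores, average = agreement_matrix({
    "Vega": {"a", "b", "c", "d"},
    "Joao": {"a", "b", "c"},
    "Gerard": {"d", "e"},
})
for (u, v), s in scores.items():
    print(f"{u} / {v}: {s:.0%}")
print(f"average: {average:.2%}")
```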

IAA at the document level

tagtog calculates the agreement once at least two users have confirmed their version of the document. The values are shown in the sidebar, next to each annotation task. Each value represents the agreement on the annotation(s) for that task in this specific document. The agreement is calculated using only the annotations from confirmed versions.

Only roles with the permission to read the project metrics (metrics > canRead) can see the IAA values. This is ideal for reviewers, who need to assess the quality of the annotations before accepting them. If a user's role has this permission (e.g., the default role supercurator, but not curator), that user also sees the document-level IAA values for their own annotations. While this can increase annotation bias in some scenarios, it also enables an interesting use case: users can understand the quality of their annotations and spot unintended errors.

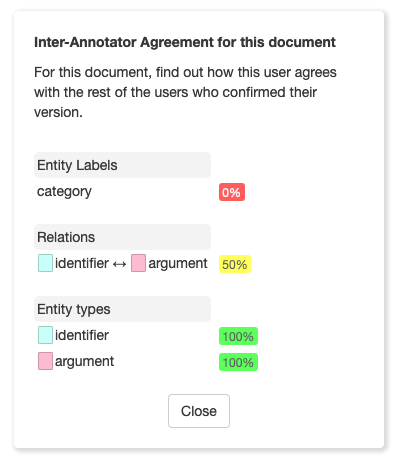

If you click any of these IAA values, a modal dialog opens with the list of annotation tasks and their respective IAA values. This way, you see all the information in one place, which makes it easier to compare values and identify problems.

To interpret the IAA values, suppose you see a 70% IAA next to a document label. This means that the value selected by this user was also selected by 70% of all users who confirmed this document. You can find a more detailed example below:

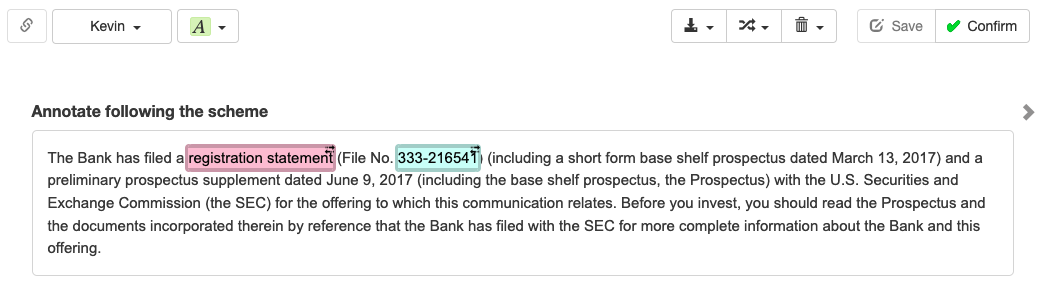

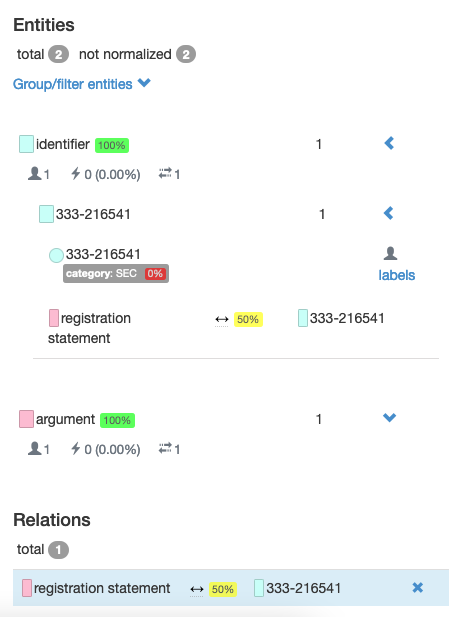

In this example, you see Kevin's annotations.

In the sidebar, you find the IAA values for this document. Kevin's agreement with the other users who have already confirmed their version is 100% for the entity types identifier and argument. However, the value he selected for the entity label category ('SEC') has not been selected by any of the other users (agreement: 0%). For the relation, only half of the users agree with him (agreement: 50%). This information gives you an overview of the quality of Kevin's annotations for this specific document.

Clicking any of the IAA values opens a modal dialog with a summary of all the annotation tasks and their respective IAA for this specific document.
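The document-level values in Kevin's example can be sketched in Python as the share of the other confirmed users whose choice matches the current user's choice. Dividing by the other users rather than by all users is an assumption inferred from Kevin's 0% above (his own selection would otherwise count), and the data is hypothetical.

```python
from typing import Dict, Hashable, Optional


def doc_agreement(user: str, choices: Dict[str, Optional[Hashable]]) -> Optional[float]:
    """Share of the other confirmed users whose choice matches `user`'s choice.

    `choices` maps each user who confirmed the document to what they selected
    for one annotation task (None if they annotated nothing for it).
    """
    others = [choice for name, choice in choices.items() if name != user]
    if not others:
        return None  # fewer than two confirmed versions: no IAA yet
    mine = choices[user]
    return sum(choice == mine for choice in others) / len(others)


# Hypothetical data loosely modelled on Kevin's example.
category = {"Kevin": "SEC", "Ana": "FIN", "Bo": "FIN"}
relation = {"Kevin": ("e1", "e2"), "Ana": ("e1", "e2"), "Bo": None}
print(doc_agreement("Kevin", category))  # 0.0
print(doc_agreement("Kevin", relation))  # 0.5
```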

What can I do if IAA is low?

There may be several reasons why your annotators do not agree on the annotation tasks. It is important to identify the causes and mitigate these risks as soon as possible. If you find yourself in this scenario, we recommend reviewing the following:

Guidelines are key. If a large group of annotators disagree on a specific annotation task, your guidelines for this task are most likely not clear enough. Try to provide representative examples for different scenarios, discuss boundary cases, and remove ambiguity. Remember that you can attach pictures or screenshots to the guidelines.

Be specific. If annotation tasks are defined too broadly or ambiguously, there is room for different interpretations and, eventually, disagreement. On the other hand, very rich and granular tasks can be difficult for annotators to annotate accurately. Depending on the scope of your project, find the best trade-off between highly specific annotations and annotations that are affordable to produce.

Test reliability. Before annotating large amounts of data, run several assessments on a sample of the data. Once the team members have annotated this sample, check the IAA and improve your guidelines or train your team accordingly.

Train. Make sure you properly train members joining the annotation project. If you find annotators who disagree with most members of the team, investigate the reasons, evolve your guidelines, and train them further.

Check how heterogeneous your data is. If your documents differ greatly from each other in complexity or structure, more effort will be required to stabilize the agreement. We recommend splitting the data into homogeneous groups.

Adjudication (Merging)

When different users annotate the same document, the result is several annotation versions. Adjudication is the process of resolving inconsistencies among these versions before promoting a version to master. In other words, the different annotators' versions are merged into one (using various strategies). This is also called aggregation.

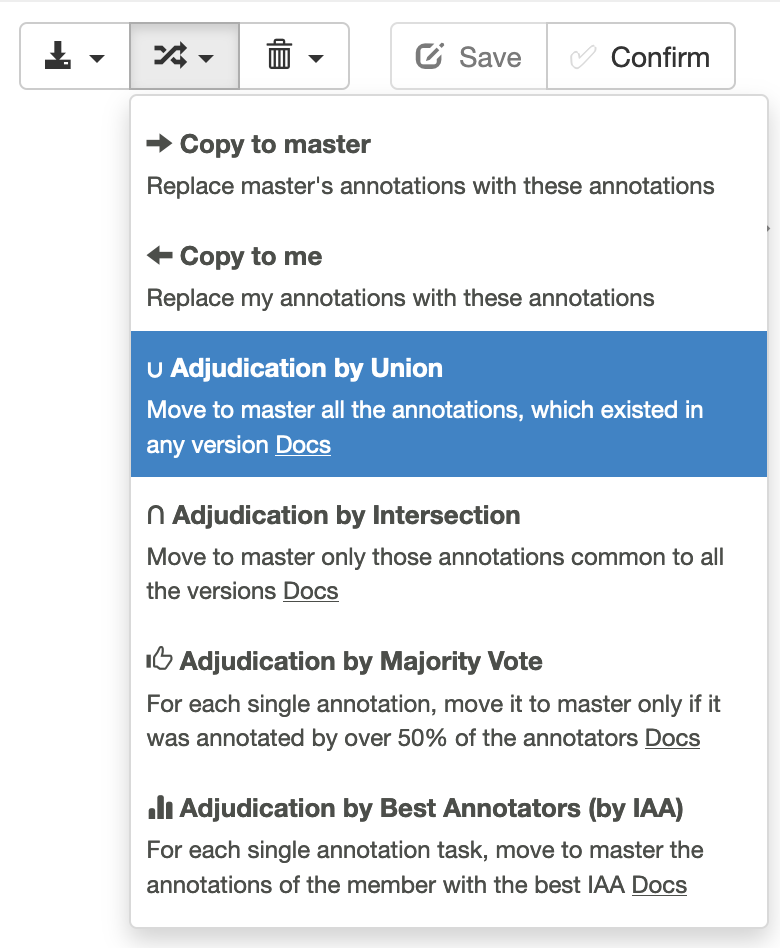

tagtog supports manual adjudication and automatic adjudication. The adjudication methods presented below are available both from the user interface and via the API. In the user interface, you find these options in the toolbar of the annotation editor, under Manage annotation versions.

Manual & Automatic Adjudication Actions available on the tagtog document editor.

Manual adjudication

A user with the reviewer role, or a role with similar permissions (e.g., supercurator), promotes a user version to master using the adjudication option Copy to master. If required, the reviewer can then manually change master to amend any of the user's annotations.

Alternatively, the reviewer can use one of the automatic adjudication methods explained below and then apply the required changes to master.

Automatic adjudication

Depending on your quality requirements, you might want to automate the review of the annotations to some extent. If more than one user annotates each document, you can use the automatic adjudication methods to obtain a master version by merging all users' versions of a given document. Note that a user with the required permissions (e.g., role reviewer) can still edit master after the adjudication. Therefore, you can either fully automate the review process or accelerate it by reviewing only master rather than each user version.

Let's explore the different strategies for automatic adjudication.

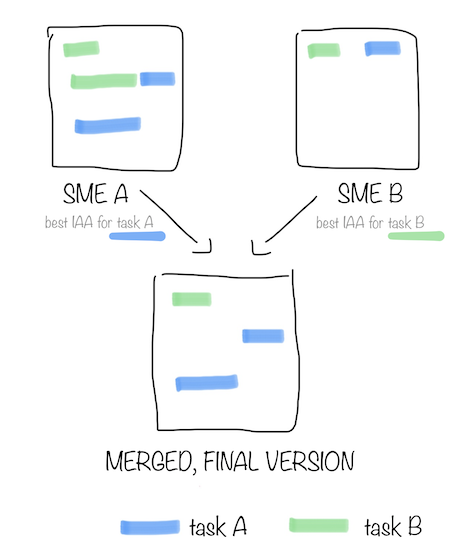

Automatic adjudication based on: IAA

For each annotation task, this method promotes to master the annotations of the user with the best IAA for that task (calculated with the exact_v1 metric across all documents).

The goal is to have the best available annotations for each annotation task in master. That is why this adjudication method is also known as adjudication by Best Annotators.

In this example, SME A (Subject-Matter Expert A) has the highest IAA for task A, and SME B for task B. The result is the annotations for task A by SME A plus the annotations for task B by SME B.

tagtog continuously computes the IAA values for each annotation task. If there is not enough information to compute the IAA for a specific task, then, for that task only, tagtog promotes to master the annotations of the user with the highest IAA in the project.

If you want to know more about the adjudication process and when it makes sense to use an automatic process, take a look at this blog post: The adjudication process in collaborative annotation

If you want to see a step-by-step example for setting up automatic adjudication, check out this post: Automatic adjudication based on the inter-annotator agreement
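A simplified Python sketch of adjudication by Best Annotators, including the fallback to the user with the highest project-level IAA. It assumes you already have per-task and project-level IAA scores for each user (computing them, e.g. with exact_v1, is out of scope here); function names and data shapes are hypothetical.

```python
from typing import Dict, Hashable, List, Set


def best_annotators(task_iaa: Dict[str, Dict[str, float]],
                    project_iaa: Dict[str, float],
                    tasks: List[str]) -> Dict[str, str]:
    """Pick, for each task, the user with the highest IAA for that task.

    Tasks without IAA data fall back to the user with the highest project-level IAA.
    """
    fallback = max(project_iaa, key=project_iaa.get)  # requires a non-empty project_iaa
    chosen = {}
    for task in tasks:
        scores = task_iaa.get(task)
        chosen[task] = max(scores, key=scores.get) if scores else fallback
    return chosen


def adjudicate_by_iaa(versions: Dict[str, Dict[str, Set[Hashable]]],
                      chosen: Dict[str, str]) -> Dict[str, Set[Hashable]]:
    """Build master: for each task, copy the chosen user's annotations for that task."""
    return {task: set(versions[user].get(task, set())) for task, user in chosen.items()}


chosen = best_annotators(
    task_iaa={"A": {"SME_A": 0.9, "SME_B": 0.6}, "B": {"SME_A": 0.5, "SME_B": 0.8}},
    project_iaa={"SME_A": 0.70, "SME_B": 0.75},
    tasks=["A", "B", "C"],  # no IAA data yet for task C
)
master = adjudicate_by_iaa(
    {"SME_A": {"A": {"a1"}, "B": {"b1"}, "C": {"c1"}},
     "SME_B": {"A": {"a2"}, "B": {"b2"}, "C": {"c2"}}},
    chosen,
)
print(chosen)  # {'A': 'SME_A', 'B': 'SME_B', 'C': 'SME_B'}
```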

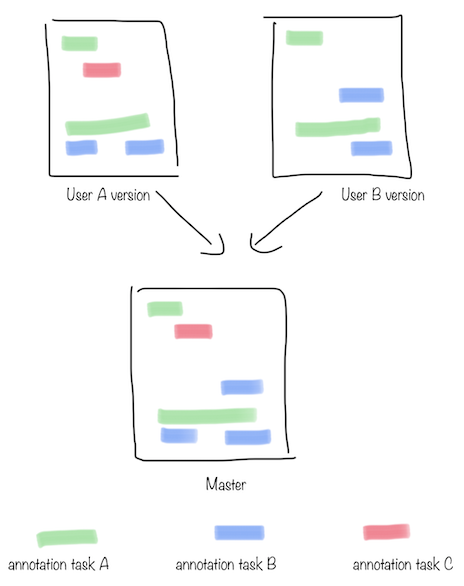

Automatic adjudication by: Union

This method promotes to master all the annotations from all the confirmed users’ versions.

In this example, user A's version and user B's version are merged using the Union method. All the annotations from user A and user B are promoted to master.

If an annotation is repeated in two or more versions, only one of the occurrences is promoted to master. However, this occurrence has some properties that differ from those of the original annotation.

Find below how this method generates the resulting master version:

| Component | Description | ann.json location |
|---|---|---|
| Probability | The average of the probabilities of all the occurrences. For example, if three users confirmed their annotation version and only two of them have this annotation in their version, tagtog promotes the annotation to master with a probability of 2/3. | |
| User list | Composed of all the users who have this annotation in their version. | |
| Entity Labels and Normalizations | If an entity is common to all versions but its entity label values or normalizations differ, the result of Union is one entity whose entity label/normalization value is set using the first version's value. For example, suppose user A has selected the value … | |
| Relations | Each different relation is promoted to master. | |
| Document Labels | The value is set using the first version's value. | |
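Here is a minimal Python sketch of the Union rules for probability and user list. It assumes annotations can be compared as hashable values and that every user-made annotation has probability 1, so the averaged probability becomes the fraction of confirmed versions that contain the annotation. Entity labels, normalizations, and relations are left out, and the names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Set


def union(versions: Dict[str, Set[Hashable]]) -> Dict[Hashable, dict]:
    """Merge confirmed versions: every distinct annotation is promoted once.

    Assumes each user-made annotation has probability 1, so the averaged
    probability is (versions containing it) / (confirmed versions).
    """
    users_by_ann: Dict[Hashable, List[str]] = defaultdict(list)
    for user in sorted(versions):  # sorted only to make the user lists deterministic
        for ann in versions[user]:
            users_by_ann[ann].append(user)
    n = len(versions)
    return {ann: {"probability": len(users) / n, "users": users}
            for ann, users in users_by_ann.items()}


master = union({
    "A": {"e1", "e2"},
    "B": {"e2", "e3"},
    "C": {"e1", "e2"},
})
print(master["e1"])  # {'probability': 0.6666666666666666, 'users': ['A', 'C']}
```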

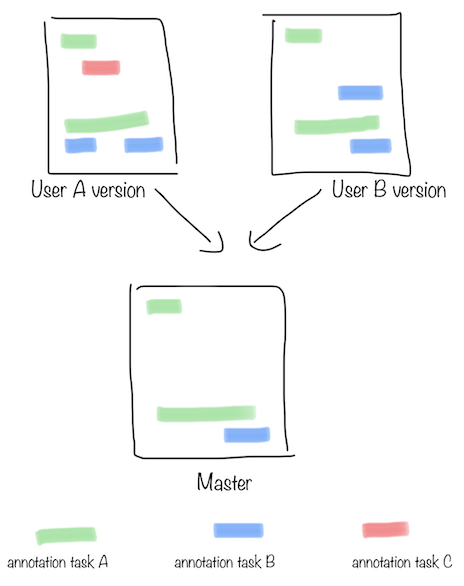

Automatic adjudication by: Intersection

This method promotes to master all the annotations common to all the confirmed users' versions.

The Intersection method is the strictest adjudication method: to promote an annotation to master, all the users must agree on it. It is recommended for environments where annotations play a critical role and where incorrect annotations might have a considerable impact.

In this example, user A's version and user B's version are merged using the Intersection method. Only the annotations that are in both versions are promoted to master.

Find below how this method generates the resulting master version:

| Component | Description | ann.json location |

|---|---|---|

| Probability | Because the annotations promoted the master are common to all the versions, the probability is always |

|

| User list | Because the annotations promoted the master are common to all the versions, all the users who confirmed their version at the moment of the adjudication are in this list. |

|

| Entity Labels and Normalizations | If an entity is common to all versions, but not the entity label values or normalizations, the result of Intersection is the entity with no entity labels or normalizations set. For example, suppose user A has selected the value |

|

| Relations | Only the relations (and their entities) common to all the versions are promoted to master. If any of the entities composing the relation is not promoted to master for not meeting the adjudication method criteria, then the relation is not promoted. |

|

| Document Labels | Value is only set if all the users agree on the same value. |

|
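A minimal Python sketch of the Intersection rules for entities, entity labels, and document labels. Representing each version as a `{entity: label value}` dictionary is an assumption for illustration; relations are omitted, and probability (always 1) and the user list (all confirmed users) are implied rather than stored.

```python
from typing import Dict, Hashable, Optional


def intersect_entities(versions: Dict[str, Dict[Hashable, Optional[str]]]) -> Dict[Hashable, Optional[str]]:
    """Keep entities present in every confirmed version.

    `versions` maps user -> {entity: entity label value or None}. A label value
    survives only if every user chose it; otherwise the entity is kept without one.
    """
    if not versions:
        return {}
    common = set.intersection(*(set(v) for v in versions.values()))
    master = {}
    for entity in common:
        values = {v[entity] for v in versions.values()}
        master[entity] = values.pop() if len(values) == 1 else None
    return master


def intersect_doc_label(choices: Dict[str, Optional[str]]) -> Optional[str]:
    """A document label value is set only if all users chose the same value."""
    values = set(choices.values())
    return values.pop() if len(values) == 1 else None


master = intersect_entities({
    "A": {(0, 4, "org"): "SEC", (10, 12, "org"): "FIN"},
    "B": {(0, 4, "org"): "SEC", (10, 12, "org"): "BANK", (20, 25, "org"): None},
})
print(master[(0, 4, "org")], master[(10, 12, "org")])  # SEC None
```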

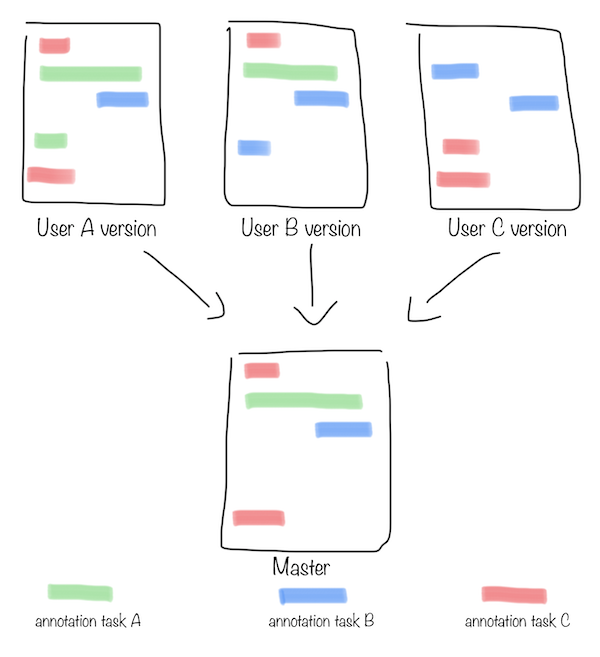

Automatic adjudication by: Majority Vote

This method promotes an annotation to master only if more than 50% of the annotators annotated it.

In this example, the versions of user A, user B, and user C are merged using the Majority Vote method. Only the annotations common to more than 50% (a majority) of the versions are promoted to master. For example, the annotation for task C (red) at the top left was selected by user A and user B; they form a majority (2 out of 3, or about 67%), so this annotation is promoted to master. Conversely, annotations not selected by a majority are not promoted to master.

Find below how this method generates the resulting master version:

| Component | Description | ann.json location |
|---|---|---|
| Probability | tagtog only promotes to master the annotations that more than 50% (a majority) of the users have in their version. This means that the probability of any annotation promoted to master is over 0.5. | |
| User list | Composed of all the users who have this annotation in their version. | |
| Entity Labels and Normalizations | Suppose an entity is common to all versions, but the values for an entity label or normalization differ. If the same value has been chosen by 50% or fewer of the users, the Majority Vote method results in the entity with no entity label or normalization set. If more than 50% of the users choose the same value, the entity label or normalization is set to that value. For example, suppose user A has selected the value … | |
| Relations | Only the relations (and their entities) common to more than 50% of the versions are promoted to master. If any of the entities composing the relation is not promoted to master because it does not meet the adjudication method's criteria, then the relation is not promoted. | |
| Document Labels | The value is only set if more than 50% of the users agree on the same value. | |
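The Majority Vote rules for entities and entity labels can be sketched in Python as follows. The strict `2 * count > n` test implements "more than 50%"; the data shapes and names are hypothetical, the probability assumes user-made annotations have probability 1, and relations and document labels are omitted.

```python
from collections import Counter
from typing import Dict, Hashable, Optional


def majority_vote(versions: Dict[str, Dict[Hashable, Optional[str]]]) -> Dict[Hashable, dict]:
    """Promote entities found in more than 50% of the confirmed versions.

    `versions` maps user -> {entity: entity label value or None}. The label value
    is kept only if more than 50% of all users chose that same value.
    """
    n = len(versions)
    counts = Counter(entity for v in versions.values() for entity in v)
    master = {}
    for entity, count in counts.items():
        if 2 * count <= n:  # not a strict majority of versions
            continue
        votes = Counter(v[entity] for v in versions.values() if entity in v)
        value, top = votes.most_common(1)[0]
        master[entity] = {
            "label": value if 2 * top > n else None,
            "probability": count / n,  # assumes user-made annotations have probability 1
        }
    return master


master = majority_vote({
    "A": {"e1": "SEC", "e2": "X", "e4": "P"},
    "B": {"e1": "SEC", "e4": "Q"},
    "C": {"e1": "FIN", "e3": "Y"},
})
print(master["e1"])  # {'label': 'SEC', 'probability': 1.0}
```

Here `e4` is promoted (2 of 3 versions) but without a label, because neither `P` nor `Q` reaches a majority.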

Conflict resolution

When you start an annotation project, you want your annotators to align with your project guidelines. Depending on the project, this might be challenging during the first few iterations, when annotators might not adhere to the guidelines or might resolve the annotation tasks differently. During this process, we recommend addressing these differences through conflict resolution:

1. Upload a set of documents. Each annotator annotates each of the documents.

2. Using the GUI or the API, select automatic adjudication by Union to move all the users' annotations to master.

3. The user in charge of conflict resolution goes to the master version and filters the annotations by probability. Any probability under 100% shows the annotations that have a conflict, i.e., annotations that not all users agreed on. For example, if you set the filter to 99%, it shows all the annotations that fewer than 99% of the annotators annotated. With many annotators, you probably want to filter using a lower probability (e.g., less than 70%).

4. Go over each annotation with your team to understand what went wrong. Update the guidelines to avoid this issue in the future.
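Steps 2 and 3 can be approximated in Python: compute Union-style probabilities (assuming each user-made annotation has probability 1) and keep the annotations whose probability is below a threshold, lowest agreement first. The function name and data shapes are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, List, Set, Tuple


def conflicts(versions: Dict[str, Set[Hashable]], below: float = 1.0) -> List[Tuple[Hashable, float]]:
    """Union-style probabilities, keeping only annotations whose probability is < `below`."""
    n = len(versions)
    counts = Counter(ann for anns in versions.values() for ann in anns)
    flagged = [(ann, c / n) for ann, c in counts.items() if c / n < below]
    # Lowest agreement first; repr() only breaks ties deterministically.
    return sorted(flagged, key=lambda item: (item[1], repr(item[0])))


team = {"A": {"x", "y"}, "B": {"x"}, "C": {"x", "z"}}
print(conflicts(team))             # [('y', 0.3333333333333333), ('z', 0.3333333333333333)]
print(conflicts(team, below=0.3))  # []
```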